Storage is not a simple math problem; there is more than one way to balance data reliability, cost, and performance.

-

Cache

The word "cache" is French in origin; the author of a 1967 electronic engineering journal paper explained it as "safekeeping storage." Intel was a pioneer in using cache to break the memory-speed bottleneck, launching the 80386 processor in 1985.

"Cache" initially referred only to temporary storage between the CPU and memory. The concept has since expanded: a cache can also sit between memory and hard disk. In general, a cache "is a hardware or software component that stores data so that future requests for that data can be served faster"; the data stored in a cache might be the result of an earlier computation or a copy of data stored elsewhere...

Ranked by data transfer speed in descending order, storage media are: memory > flash > disk > tape. Disks are much slower than the CPU, so to bridge the gap, a computer system sets aside part of its memory as a cache, called the disk cache.

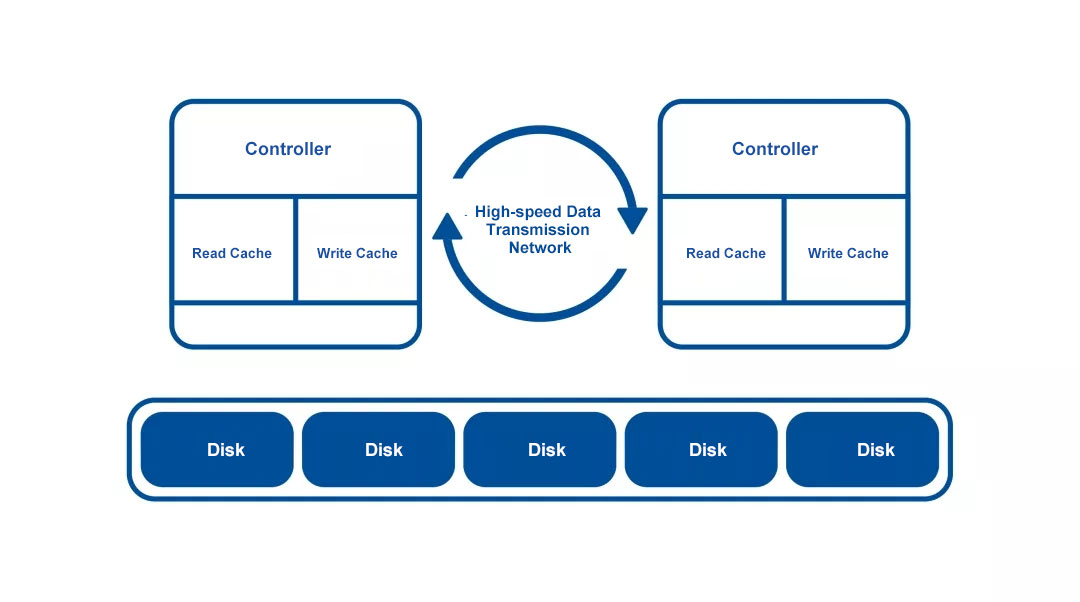

For the same reason, a storage system places memory used as cache between the storage controller and the back-end disk group. The memory used here is usually DRAM (dynamic random-access memory) or SDRAM (synchronous dynamic random-access memory).

Both the computer system's disk cache and the storage system's memory cache can be further divided into read cache and write cache.

-

Read Cache

When the storage system receives a read I/O request, it first looks for the data in the read cache. Ideally, the requested data is found in the cache, which is a cache hit. Otherwise, a cache miss occurs, and the storage controller has to issue a read I/O command to the back-end disk, which increases latency. For a hard disk, for example, additional time is needed for seeking, rotational latency, and data transfer.

Dividing the number of cache hits by the total number of lookups (cache hits + cache misses) gives the cache hit ratio. The read cache is usually small relative to the entire storage system, so it can hold only a limited amount of data, and cached entries have to be replaced with data from the back-end disks according to a specified strategy. Under the LRU (Least Recently Used) strategy, cached data that is not read again soon is the first to be evicted. Random reads further increase the load on the read cache.
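The read path and the hit ratio can be illustrated with a minimal sketch. The class name `LRUReadCache`, the `backend` dictionary standing in for the back-end disks, and the tiny capacity are all assumptions made for illustration; a real storage controller is far more involved.

```python
from collections import OrderedDict


class LRUReadCache:
    """Tiny LRU read cache in front of a slower back end (hypothetical names)."""

    def __init__(self, capacity, backend):
        self.capacity = capacity
        self.backend = backend          # dict standing in for the back-end disks
        self.entries = OrderedDict()    # least recently used entry comes first
        self.hits = 0
        self.misses = 0

    def read(self, block):
        if block in self.entries:
            self.hits += 1
            self.entries.move_to_end(block)   # mark as most recently used
            return self.entries[block]
        self.misses += 1
        data = self.backend[block]            # slow path: back-end read I/O
        self.entries[block] = data
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used entry
        return data

    def hit_ratio(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


backend = {n: f"block-{n}" for n in range(10)}
cache = LRUReadCache(capacity=2, backend=backend)
for block in [1, 2, 1, 3, 2]:
    cache.read(block)
print(cache.hits, cache.misses, cache.hit_ratio())  # prints: 1 4 0.2
```

With room for only two blocks, the read of block 3 evicts block 2, so the final read of block 2 misses again. This is the kind of churn that a small cache suffers under scattered reads.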

-

Write Cache

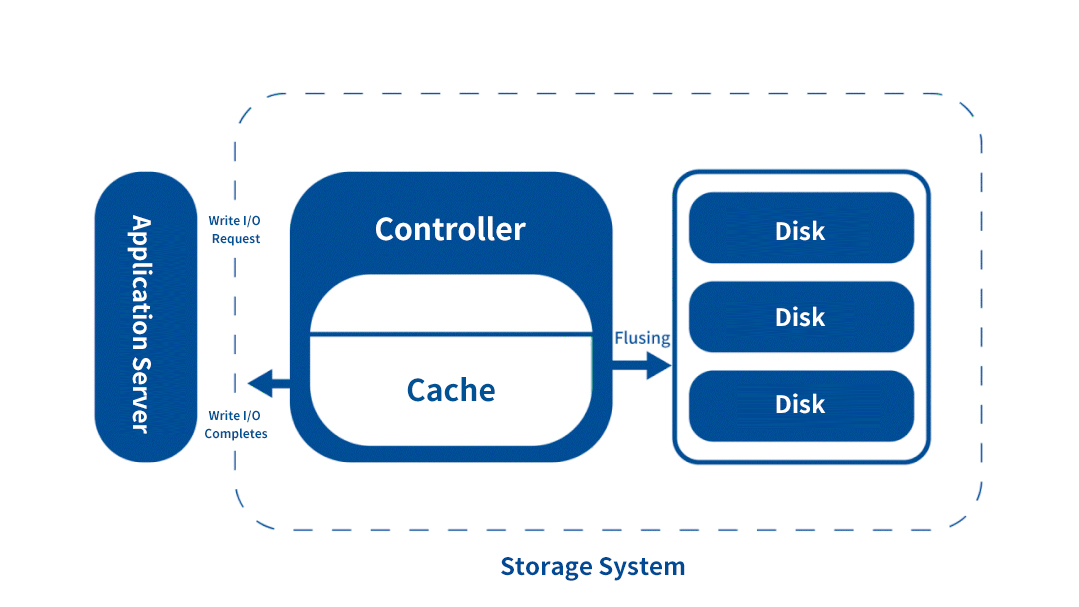

There are two strategies for the write cache of the storage system: Write-through and Write-back.

With the Write-through strategy, when a write I/O request is received, the data is written to the cache and to the back-end disk at the same time. This process is simple and keeps the cache consistent with the back-end disk, but it increases latency and consumes more bandwidth. Performance also suffers badly when data at the same address is updated frequently, because every update goes to the back end.

With the Write-back strategy, when a write I/O request is received, the data is updated only in the cache, and a write completion is returned immediately. The write is therefore fast, because the data has not yet actually been written to the back-end device.
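The difference between the two strategies shows up when the same address is updated repeatedly. The following is a simplified sketch, not a model of any particular product: the class names, the `backend_writes` counter, and the dictionaries standing in for cache and back-end disk are all hypothetical.

```python
class WriteThroughCache:
    """Every write goes to the cache and the back end together."""

    def __init__(self):
        self.cache = {}
        self.backend = {}          # dict standing in for the back-end disk
        self.backend_writes = 0

    def write(self, address, data):
        self.cache[address] = data
        self.backend[address] = data   # back end is updated on every write
        self.backend_writes += 1


class WriteBackCache:
    """Writes are acknowledged from the cache; the back end is updated later."""

    def __init__(self):
        self.cache = {}
        self.backend = {}
        self.dirty = set()         # addresses not yet written to the back end
        self.backend_writes = 0

    def write(self, address, data):
        self.cache[address] = data
        self.dirty.add(address)    # acknowledged without touching the back end

    def flush(self):
        # Deferred write of all dirty data to the back end.
        for address in sorted(self.dirty):
            self.backend[address] = self.cache[address]
            self.backend_writes += 1
        self.dirty.clear()


wt, wb = WriteThroughCache(), WriteBackCache()
for value in range(5):             # update the same address five times
    wt.write(7, value)
    wb.write(7, value)
wb.flush()
print(wt.backend_writes, wb.backend_writes)  # prints: 5 1
```

In this sketch, Write-through pays for five back-end writes, while Write-back coalesces the five updates into one. Until `flush()` runs, however, the newest data exists only in the cache.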

When the data in the write cache reaches a certain amount, the storage controller writes it to the back-end disk, an operation called "flushing". Storage systems usually support two flushing algorithms, "demand-based" and "age-based".

The demand-based algorithm sets a fixed percentage of used cache space as a threshold; once the data in the write cache reaches that threshold, flushing is executed. The age-based algorithm sets how long data may stay in the write cache before it is flushed.
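As a rough illustration of the two flushing triggers, the sketch below combines them in one write-back cache. Everything here is an assumption for the sake of the example: the class name `FlushingWriteCache`, the parameters `flush_threshold` and `max_age`, the explicit `now` timestamps, and the choice to flush all dirty data when the threshold is reached. Real controllers choose their own thresholds and flush granularity.

```python
class FlushingWriteCache:
    """Write-back cache with demand-based and age-based flushing (illustrative)."""

    def __init__(self, capacity, flush_threshold=0.8, max_age=30.0):
        self.capacity = capacity                # number of cache slots
        self.flush_threshold = flush_threshold  # fraction of used slots that triggers a flush
        self.max_age = max_age                  # seconds data may wait in the cache
        self.dirty = {}                         # address -> (data, time first cached)
        self.backend = {}                       # dict standing in for the back-end disk

    def write(self, address, data, now):
        # Keep the original timestamp if the address is already waiting to be flushed.
        first_cached = self.dirty.get(address, (None, now))[1]
        self.dirty[address] = (data, first_cached)
        # Demand-based: flush once used space reaches the threshold.
        if len(self.dirty) / self.capacity >= self.flush_threshold:
            self.flush(list(self.dirty))

    def tick(self, now):
        # Age-based: flush entries that have waited at least max_age seconds.
        expired = [a for a, (_, t) in self.dirty.items() if now - t >= self.max_age]
        self.flush(expired)

    def flush(self, addresses):
        for address in addresses:
            data, _ = self.dirty.pop(address)
            self.backend[address] = data


cache = FlushingWriteCache(capacity=4, flush_threshold=0.5, max_age=30.0)
cache.write(1, "a", now=0.0)
cache.tick(now=30.0)            # address 1 has aged out and is flushed
cache.write(2, "b", now=31.0)   # 1 of 4 slots used: below the threshold
cache.write(3, "c", now=32.0)   # 2 of 4 slots used: threshold reached, flush
print(sorted(cache.backend))    # prints: [1, 2, 3]
```

The caller passes the clock value explicitly so that the behavior is deterministic; a real system would rely on a timer instead.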

Note that if the system loses power before or during flushing, cached data that has not yet reached the back end is lost permanently and cannot be recovered. In addition, the Write-back strategy improves performance only when the load on the storage system is low. Under high load, the write cache fills quickly, flushing runs frequently, and system performance drops significantly.

-

The Best Balance between Performance, Capacity and Cost

As the saying goes, every coin has two sides. A cache can improve system performance, but it comes with a risk of data loss. We can raise the cache hit ratio by expanding cache capacity, but cost rises with it. In practice, therefore, it is necessary to find the best balance between performance, capacity, and cost.

That leaves us with a question: is it inevitable that we must sacrifice reliability and cost for performance? Storage is not a simple math problem; there is more than one way to balance data reliability, cost, and performance. And storage technology keeps advancing, which will give us more choices in the future.